Syntel Interview

Date - 14/04/2018

Post - ETL Developer(Informatica)

There were 2 interview rounds, L1 and L2, followed by an HR discussion.

L1 round - A few of the technical questions asked -

1. Draw the Informatica architecture and explain it.

2. Transaction Control - explain with an example.

3. Can we control the order in which targets are loaded in a mapping, and how? --> The question was about the target load plan option present in Informatica.

4. What are nodes and a grid, and why do we use a grid?

5. There are 2 sources, say A and B, with employeeid as the common attribute. The scenario is to obtain the 3 results below -

Target1 - records matching in both of them.

Target2 - all those records which are present in A and not present in B

Target3 - all those records which are present in B and not present in A
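
One common way to approach question 5 in Informatica is a Joiner with a full outer join on employeeid, followed by a Router with three groups (key present on both sides, key missing on the B side, key missing on the A side). The same set logic can be sketched in shell with `comm`. This is only an illustration, assuming A and B are comma-delimited extracts with employeeid as the first field and no header row; the function name and output file names are made up.

```shell
#!/bin/sh
# Hypothetical helper: split two extracts by employeeid.
# Assumes employeeid is field 1 of each comma-delimited line, no header row.
split_by_employeeid() {
    file_a=$1
    file_b=$2
    out_dir=$3

    # comm needs both inputs sorted the same way; sort -u also drops duplicate keys.
    cut -d',' -f1 "$file_a" | sort -u > "$out_dir/a.ids"
    cut -d',' -f1 "$file_b" | sort -u > "$out_dir/b.ids"

    # -12 keeps only the common column, -23 only A's column, -13 only B's column.
    comm -12 "$out_dir/a.ids" "$out_dir/b.ids" > "$out_dir/target1.ids"  # in both A and B
    comm -23 "$out_dir/a.ids" "$out_dir/b.ids" > "$out_dir/target2.ids"  # in A, not in B
    comm -13 "$out_dir/a.ids" "$out_dir/b.ids" > "$out_dir/target3.ids"  # in B, not in A
}
```

Calling `split_by_employeeid A.csv B.csv /tmp/out` would leave three key lists in `/tmp/out`, one per target.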

6. Write a shell script which will perform the below file validations -

1. Check the file name

2. Check the file extension

3. Check the file delimiter

4. Check the file header

Send a mail if any of the above fails.
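
A minimal sketch of how such a script could look. The interview did not specify the expected file name, extension, delimiter, header or mail recipient, so every value at the top is a placeholder, and the function names are made up. It also assumes a working `mail` (mailx) command on the host.

```shell
#!/bin/sh
# All expected values below are assumptions for illustration only.
EXPECTED_PREFIX="employee_"             # hypothetical file name prefix
EXPECTED_EXT="csv"                      # hypothetical extension
EXPECTED_DELIM=","                      # hypothetical delimiter
EXPECTED_HEADER="employeeid,name,dept"  # hypothetical header line
MAIL_TO="etl-support@example.com"       # hypothetical recipient

# Prints one line per failed check; prints nothing when the file passes.
validate_file() {
    path=$1
    base=${path##*/}    # strip the directory part

    # 1. File name must start with the expected prefix.
    case $base in
        "$EXPECTED_PREFIX"*) ;;
        *) echo "bad file name: $base" ;;
    esac

    # 2. File name must end with the expected extension.
    case $base in
        *."$EXPECTED_EXT") ;;
        *) echo "bad extension: $base" ;;
    esac

    if [ ! -r "$path" ]; then
        echo "file not readable: $path"
        return 0
    fi

    header=$(head -n 1 "$path")

    # 3. The first line must contain the expected delimiter.
    case $header in
        *"$EXPECTED_DELIM"*) ;;
        *) echo "delimiter '$EXPECTED_DELIM' not found in first line" ;;
    esac

    # 4. The first line must match the expected header exactly.
    [ "$header" = "$EXPECTED_HEADER" ] || echo "unexpected header: $header"
}

# Runs the checks and mails the failures, if there are any.
validate_and_notify() {
    errors=$(validate_file "$1")
    if [ -n "$errors" ]; then
        printf '%s\n' "$errors" | mail -s "File validation failed: $1" "$MAIL_TO"
        return 1
    fi
    return 0
}
```

Keeping the checks in `validate_file` and the mail step in `validate_and_notify` means the checks can be tried out on sample files without sending anything.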

7. A few questions on grep and cut commands
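
The exact grep and cut questions are not listed here, so the commands below are just typical usages worth knowing, run against a small made-up sample file.

```shell
#!/bin/sh
# Sample data, invented for illustration.
sample=$(mktemp)
printf 'employeeid,name,dept\n101,Ann,HR\n102,Bob,IT\n103,Cy,IT\n' > "$sample"

grep 'IT' "$sample"          # lines containing IT
grep -c 'IT' "$sample"       # count of lines containing IT
grep -v 'IT' "$sample"       # lines that do NOT contain IT
grep -n '^102,' "$sample"    # line starting with 102, prefixed with its line number
grep -i 'hr' "$sample"       # case-insensitive match

cut -d',' -f2 "$sample"      # second field (name) of every line
cut -d',' -f1,3 "$sample"    # first and third fields
cut -c1-3 "$sample"          # first three characters of every line

# Combining both: names of the employees whose dept is IT.
grep ',IT$' "$sample" | cut -d',' -f2
```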

L2 was more of a managerial round.

Basic questions, nothing very technical.

Hope these questions help you prepare. Do let me know if you need answers to these questions.

😊😊

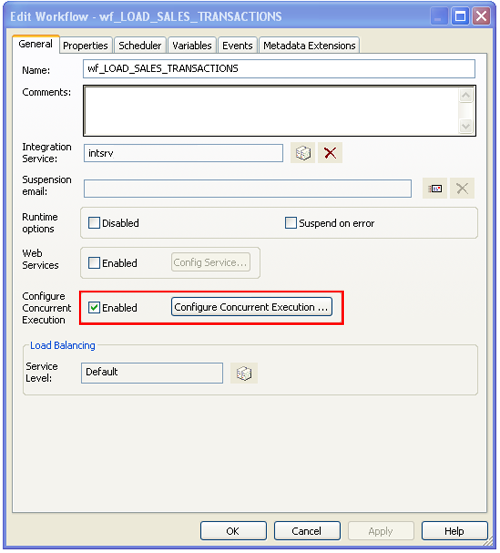

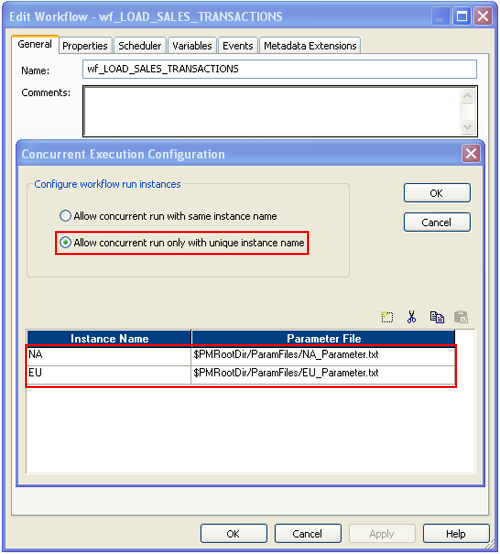

Choose the workflow instance name from the pop up window and click OK to run the selected workflow instance.
